How Do Analytics Professionals Explain AI / ML to the Non-Technical User Community?

How far have we traveled in the past few decades, from a Decision Tree tool to Generative AI? I am a veteran in this space: I studied economics in college and programmed in SAS, a statistical modeling tool. My colleagues in the 1990s were familiar with decision trees, linear or logistic regression models, and occasionally neural network models.

Today, analytics professionals have many more tools and much more powerful solutions at their disposal. At the top of the list recently is Generative AI. Even as many business leaders move to take advantage of Generative AI (enthusiastically, but responsibly), the technology itself remains an enigma to most people.

Evolution of Techniques

We can lay out the spectrum of techniques and connect the dots:

- Statistical predictive models (machine learning models), using manual coding such as SAS or R.

- Automatic Machine Learning (AutoML) tools such as Alteryx, DataRobot, and Dataiku, with low-code or no-code solutions.

- Neural network models.

- Natural Language Processing (NLP).

- Large Language Models (LLMs).

- Other types of AI methods.

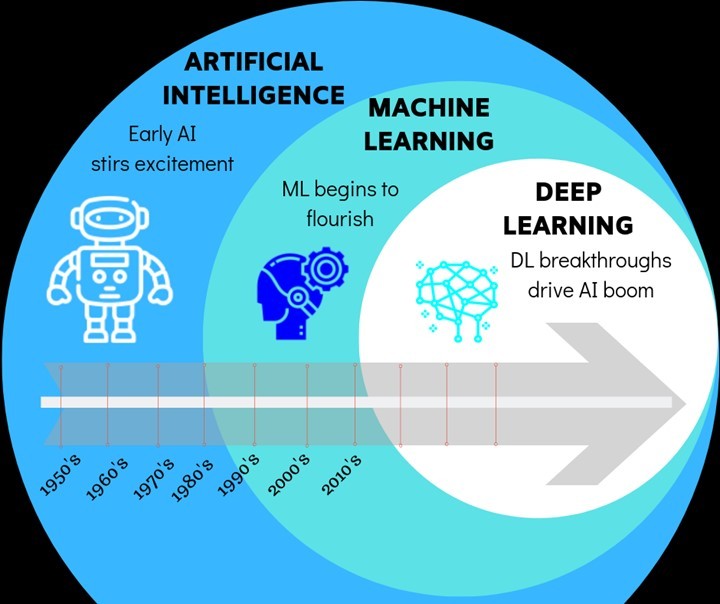

The accelerated progress of the past decades results from two factors: the plummeting cost of computing (in speed, volume, and scale) and advances in transformer techniques.

Please refer to the following illustration of AI, ML, and Deep Learning (LLM, GenAI).

From Machine Learning to Generative AI

Analytics professionals are familiar with standard algorithms and statistical models: static machine learning (ML) models that are coded offline and need frequent validation and recalibration. Examples include classification and regression trees (CART, or decision trees), principal component analysis (PCA), linear or logistic regression, and static neural networks (artificial neural networks, or ANNs). These techniques originated between the 1950s and 1970s and were widely used in the 1990s by database predictive modeling teams at major credit card companies such as MBNA, Capital One, First USA, and Advanta.

Over the last 15 years, Automated Machine Learning (AutoML) has become the most widely applied form of artificial intelligence. It uses computer models that can adapt and evolve (or “learn”) without being explicitly programmed.
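To make "learning without being explicitly programmed" concrete for a non-technical audience, here is a minimal sketch of a one-split decision tree (a "decision stump"). The cutoff is not typed in by a programmer; it is chosen from labeled examples. The data, the function names `fit_stump` and `predict`, and the credit-card framing are all hypothetical and only for illustration.

```python
# Toy illustration: a one-split decision tree ("decision stump") that learns
# its cutoff from labeled examples instead of having it hard-coded.
# All data and names here are hypothetical.

def fit_stump(values, labels):
    """Return the threshold that misclassifies the fewest training examples.

    Rule being learned: predict 1 when value >= threshold, else 0.
    """
    candidates = sorted(set(values))
    best_threshold, best_errors = candidates[0], len(values) + 1
    for threshold in candidates:
        # Count examples where the rule's prediction disagrees with the label.
        errors = sum(
            (value >= threshold) != bool(label)
            for value, label in zip(values, labels)
        )
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict(threshold, value):
    """Apply the learned rule to a new value."""
    return 1 if value >= threshold else 0


# Hypothetical card-utilization percentages and whether the account defaulted.
utilization = [10, 20, 35, 50, 65, 80, 90, 95]
defaulted = [0, 0, 0, 0, 1, 1, 1, 1]

threshold = fit_stump(utilization, defaulted)
print(threshold)               # 65
print(predict(threshold, 70))  # 1
```

If the training data changes, the learned cutoff changes with it, which is also why static models of this kind need periodic validation and recalibration.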

Generative AI grew out of these systems. Generative tools became popular in 2014, and more so in recent years. They create new content rather than analyzing existing data. The most relevant of these tools are “large language models” (LLMs). LLMs are all neural networks, a technology that is over 50 years old.

LLMs can read and write in natural languages, like English. They are what allow GenAI tools to write those sonnets, draft those speeches, and even pass the bar.

During the recent #EverlawSummit, Everlaw Founder and CEO AJ Shankar sought to demystify the workings of GenAI, how we got to the technology we have today, and where GenAI-powered legal tools may be headed in the future.

AJ described the following four core competencies of LLMs:

- Fluency: LLMs can read and write in English and other languages, often with better grammar than most of us.

- Creativity: These tools can create truly novel connections and ideas, whether analogies, poetry, or entirely new concepts.

- Knowledge: By filling in the blank in billions of sentences of training data, LLMs internalize all the knowledge contained in them.

- Logical reasoning: These tools can make inferences and connect the dots in ways few anticipated.
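The "filling in the blank" idea behind the Knowledge point can be shown with a deliberately simple analogy. The sketch below counts which word follows which in a tiny, made-up corpus and then predicts the most frequent follower. This is not how an LLM is built (LLMs are neural networks trained on vastly more text); the corpus and the names `train` and `fill_blank` are assumptions chosen only to show the training objective in miniature.

```python
from collections import Counter, defaultdict

# Toy illustration of "filling in the blank": count which word follows which
# in a tiny, made-up corpus, then predict the most frequent follower.
# Real LLMs use neural networks; this is only a word-counting analogy.

corpus = [
    "the court granted the motion",
    "the court denied the appeal",
    "the court granted the request",
]


def train(sentences):
    """Map each word to a Counter of the words that follow it."""
    followers = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers


def fill_blank(followers, word):
    """Return the most frequently seen next word, or None if the word is unseen."""
    if word not in followers:
        return None
    return followers[word].most_common(1)[0][0]


model = train(corpus)
print(fill_blank(model, "court"))  # granted
```

Even this toy picks up a "fact" from its corpus (courts here grant more often than they deny) purely from counting, which hints at how knowledge can be absorbed as a by-product of predicting missing words.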

Read the original blog: Demystifying Generative AI at Everlaw Summit

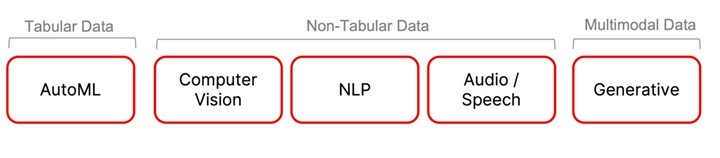

The Five “(Narrow) AI” Archetypes

Thanks to Tobias Zwingmann for his insights on the five AI archetypes, which you can explore in his blog post.

The term AI (Artificial Intelligence) has been around since the mid-1950s. Since then, two main types of AI research areas have emerged: Artificial General Intelligence (AGI) and Narrow AI.

AGI aims to develop intelligent systems capable of solving any task they encounter, much like human intelligence. Narrow AI, on the other hand, refers to systems trained for specific tasks. Currently, all AI applications in the business world are instances of Narrow AI.

The five archetypes of Narrow AI and their corresponding data types are:

- AutoML (automated machine learning) – Tabular data.

- Computer Vision – Non-tabular data.

- Natural Language Processing (NLP) – Non-tabular data.

- Audio / Speech – Non-tabular data.

- Generative AI (GenAI) – Multimodal data.

Analytics Talents with Core Skill Sets

It is important for analytics professionals to understand the evolutionary history of the tools and techniques we have been using, so we are not surprised to see the accelerated changes in technologies and techniques. Our focus remains on leveraging technology, techniques, and data to solve real-world problems and deliver actionable insights.

The core skill sets for all analytics and AI talents have been fundamentally the same and will stay consistent in the future:

- Problem solving, curiosity, open-mindedness, and continuous learning.

- Mathematics, statistics, economics, econometrics, and engineering.

- Programming (human-machine interactions), increasingly simplified through low-code and no-code tools.

- Pattern detection, adaptive algorithm-guided decision making, and automation.

By understanding the foundations of AI, we can rationally and logically analyze our pain points, avoid making rash decisions, minimize potential waste or loss due to “irrational exuberance” (AI hype), and maximize the return on investment for analytics and AI.